March 2022

Safeguarding AI Decision-Making:

Exploring a Computation Model of Cultural Sensitivity

Michelle Camargo-Reyes

School of Computing, DePaul University

243 South Wabash, Chicago, IL, 60604

mcamarg1@depaul.edu

ABSTRACT

Humans train artificial intelligence (AI) and artificial general intelligence (AGI) for different sectors of society based, in part, on human decision-making processes. The code or models of learning shape the interactions between humans and computers and affect a spectrum of situations with varying degrees of significance. As AI and AGI intelligence accelerates, the impact of these interactions will generate local and global questions relative to bias, risk, ethics, equity, governance, predictability, consciousness, and cultural sensitivity. The following literature review seeks to analyze human decision-making processes from the lens of cognitive science, identify parallels between the human brain and artificial neural networks, and explore a learning model that can train AI to consider cultural characteristics using Hofstede’s dimensions of cultures (HDC).

Keywords

Cognitive science, neural networks, intelligent agents, cultural characteristics, models of learning, and HCI theory, concepts, and models

INTRODUCTION

According to Hofstede et al., culture is a mental program, “a catchword for all those patterns of thinking, feeling, and acting…” [23]. It’s a program that runs in the human brain via a complex structure that controls how humans function [6, 9, 32]. Under the right conditions, the highly adaptable and organized connections learn a “model of the world” and build consciousness [1, 6, 9, 15, 16, 17, 23, 32].

Similarly, humans can train AI to receive information, interpret information, and complete tasks, but this process lacks consciousness [6, 9, 10, 17, 23, 28, 39]. Müller explains that while future types of AI may include “superintelligent agents,” cognitive science and critics of AI argue that such intelligence is not likely to realize “full agency” [28].

While AI may not reach a human level of agency, it will continue to evolve and have the information to make decisions that affect humanity. It’s time to consider what this means [6, 21, 28, 29, 39]. It’s time to consider the possibility that AI will exceed human decision-making capabilities and have the ability to self-improve [21, 28, 29, 39]. Sotala et al. argue that loss of human control and “mere indifference to human values—including human survival—could be sufficient for AGIs to pose an existential threat” [39].

Harris raises the same threat and explains that we have two options [21]. We can either stop technological advances or continue to evolve intelligence beyond our imagination [21]. Harris and Parnas argue that although continued development may be dangerous, it is critical to invest in AI research to prepare for the future and mitigate potential risks [21, 29, 39].

If we can better understand AI, we may be able to design conditions for safer AI development [21, 28, 29, 39]. Hawkins suggests that understanding AI first requires understanding the human brain [6]. The human brain and its functions can inform the models that train neural networks and guide their refinement [9, 29].

This literature review seeks to understand how humans make decisions within a limited scope to highlight brain processes, identify parallels between the human brain and artificial neural networks, and explore potential safeguards for models of learning [6, 23]. The next section reviews the human brain from a cognitive science perspective.

LITERATURE REVIEW

Ramachandran describes different ways to study the brain [32]. The study of damaged brains, for instance, can help to understand how the damage affects the brain’s function, capacity to recover, and plasticity [15, 32]. It’s a process that includes the brain and the mind [15, 32].

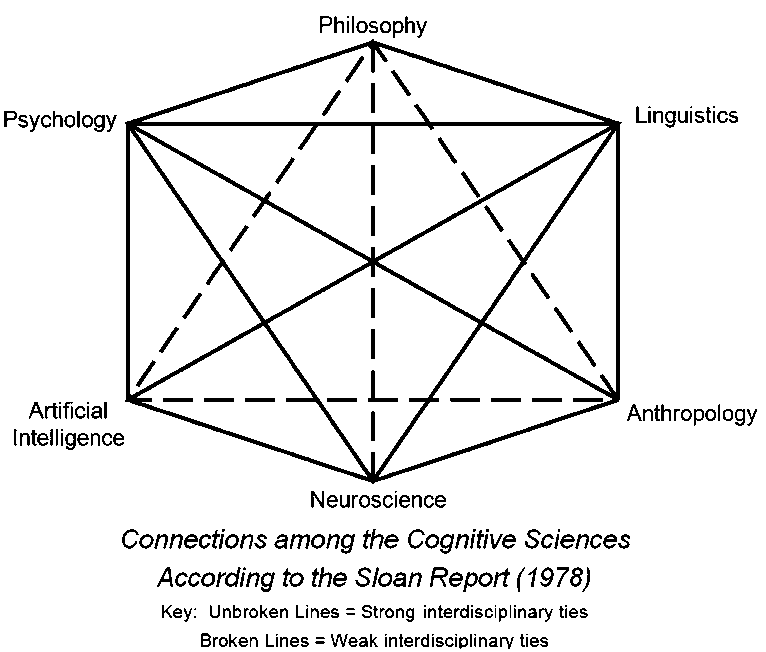

Cognitive science offers a broad view of both functions. As Bermúdez notes, the Sloan Report’s diagram (see Figure 1) illustrates the framework of cognitive science as an interdisciplinary field that focuses on “different levels of organization in the mind and nervous system” [9].

The following sections briefly describe relevant theories and processes from neuroscience, psychology, philosophy, linguistics, artificial intelligence, and anthropology [9].

Neuroscience

Starting at the bottom of the Cognitive Science Hexagonal Diagram is the study of how the human brain works—the parts and connections that make it possible for us to, under the right conditions, intuitively move, talk, think, feel, reason, and make decisions [9, 15, 32]. Humans store these processes in what Doidge calls “brain maps” [15]. Doidge explains that humans are born with draft versions of these maps that take shape after birth during the “critical period” of learning in life [15].

Various elements contribute to this process, such as neurons, the brain structure, and the somatosensory cortex.

Neurons

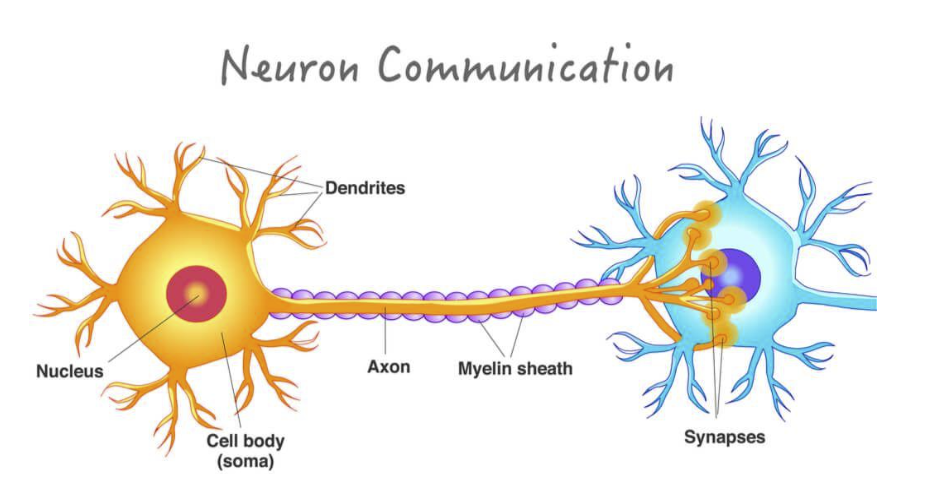

In his description of the human brain, Ramachandran notes that “the brain is made up of one hundred billion nerve cells or ‘neurons’ which form the basic structural and functional units of the nervous system” [32]. A single one of these neurons (see Figure 2) is composed, in part, of a cell body, an axon, and dendrites [5, 9, 12, 32]. Information travels along the axon, exits through the axon terminals, and transmits to the dendrites of other neurons [5, 9, 32]. It’s a process that can create an unknown number of connections and provides a partial view of the brain’s functional capacity [32].

Structure

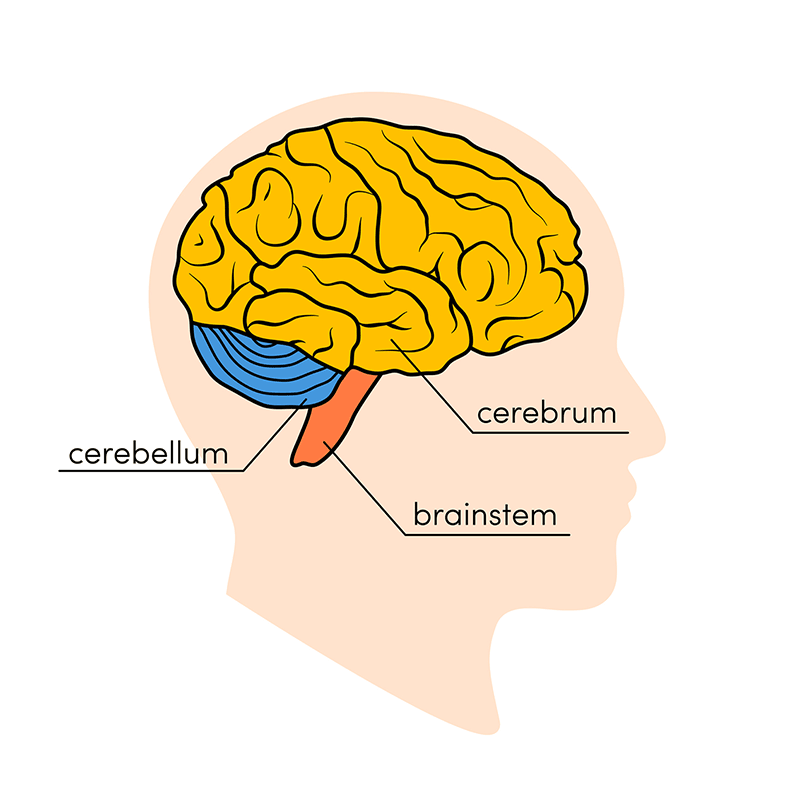

Johns Hopkins Medicine outlines different parts of the human brain and their related functions [5]. Figure 3 points to three main parts: the brainstem, the cerebellum, and the cerebrum [5]. Covering the cerebrum is the cerebral cortex, which consists of a left and right hemisphere, each responsible for controlling opposite sides of the body [5, 32].

The hemispheres are then divided into four sections and functions as follows [5, 32]:

- Frontal lobe: related to motion, reasoning, personality, decision-making, and speech abilities

- Parietal lobe: related to language, the sense of touch, and comprehending spatial connections

- Occipital lobe: related to vision

- Temporal lobe: related to speech, short-term memory, and auditory senses

Somatosensory Cortex

Located in the parietal lobe, the somatosensory cortex detects touch “sensations” from the body [32, 33]. Saadon-Grosman et al. describe Penfield and Boldrey’s work, in which they identify the locations along the somatosensory cortex of the sensations that correspond to different body parts. The result looks like a deconstructed map of body parts and is referred to as Penfield’s homunculus [33].

This type of map helps the body function and mitigate damage. Ramachandran explains that a touch in one part of the body can activate a sensation in another [32]. In related studies, patients who experience distress from an amputation also experience a sense of feeling and relief when sensation is activated in the “phantom” limb via stimulation in another part of the body [32].

It’s a process that illustrates the complexity of brain functions and connections. The different components provide insight into how the brain uses developed systems to bridge gaps and find workarounds [15, 32].

Psychology

Psychology provides insight into how the human mind stores, processes, and retrieves information to make decisions [26]. The following three theories highlight fundamental similarities between brain and computer processes [24, 26].

Information Processing Theory

McFall explains that information processing includes three continuous and simultaneous activities [26]. The input activity receives information. The processing activity interprets information [26]. And the output activity reacts to the information [26]. The product of these multiple ongoing functions is a decision.
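The three activities can be illustrated with a minimal Python sketch. The function names, the averaging step, and the 0.5 threshold are illustrative assumptions and are not part of McFall's description. The sketch also runs the activities in sequence, whereas the theory describes them as continuous and simultaneous.

```python
from typing import List


def receive(raw: str) -> List[float]:
    """Input activity: turn a raw comma-separated signal into numbers."""
    return [float(x) for x in raw.split(",") if x.strip()]


def interpret(values: List[float]) -> float:
    """Processing activity: summarize the evidence as its mean (assumed rule)."""
    return sum(values) / len(values) if values else 0.0


def react(evidence: float, threshold: float = 0.5) -> str:
    """Output activity: react to the interpreted evidence with a decision."""
    return "act" if evidence >= threshold else "wait"


def decide(raw: str) -> str:
    """Chain input, processing, and output into a single decision."""
    return react(interpret(receive(raw)))
```

Under these assumptions, `decide("0.9, 0.7, 0.8")` yields a decision to act because the averaged evidence exceeds the threshold, while an empty signal yields a decision to wait.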

Fuzzy Trace Theory

Decisions are considered, in part, to occur through one of two processes: the human brain can be fast and automatic or slow and thoughtful [26].

Bounded Rationality

Bounded rationality demonstrates how limited cognitive resources can hinder the human brain’s ability to reason and make good decisions [26]. McFall notes these include “...attention, intelligence, memory, processing speed” and states that a short supply can limit the human brain’s decision-making process [26].
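One way to picture this limit is a decision-maker that can only examine a fixed number of options before choosing. The following Python sketch is an illustration under that assumption. The `budget` parameter, the function name, and the sample values are hypothetical and do not come from McFall.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def bounded_choice(options: Sequence[T], value: Callable[[T], float], budget: int) -> T:
    """Pick the best option among the first `budget` options examined."""
    if budget < 1 or not options:
        raise ValueError("need at least one option and a budget of at least 1")
    # Limited attention: options beyond the budget are never considered.
    considered = options[:budget]
    return max(considered, key=value)


offers = [3, 8, 5, 9]  # hypothetical option values
limited = bounded_choice(offers, float, budget=2)
unlimited = bounded_choice(offers, float, budget=len(offers))
```

With a budget of two, the chooser settles on 8 and never sees the better option, 9, which it finds only when every option can be examined.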

Philosophy

Stokes explains that while psychology focuses on “human cognition,” philosophy focuses on “human perceptual experience” [40]. The following theories provide insight into how the mind influences the decision-making process.

Perception

Humans experience the world through their senses [40]. According to Stokes, the individual experiences humans collect throughout life shape their beliefs and decisions [40]. Humans can appraise information based on fact or belief [16, 40]. Focusing on a fact places the human brain in a “cognitive state,” whereas focusing on a belief places the human brain in a “perceptual state” [40].

Language of Thought

Similar to perception, this is a subjective experience. Dupre posits that the language of thought is an innate, personal, and all-encompassing process of judgments and decisions [16]. Bermúdez draws on research to propose that this inner language allows humans to learn natural languages, such as the language of respective communities [9].

Linguistics

Language connects humanity. Humans communicate with each other and computer systems via language. In human-to-human communication, language can be implicit. In human-to-computer communication, language should be explicit. Each interaction has a subset of languages. Each language has a set of rules. And all languages require the process of learning the rules.

The linguistic theory demonstrates this process of learning.

Linguistic Theory

Language starts in the mind. The language of thought facilitates learning how language works. Humans learn to recognize and interpret symbols, comprehend words and expressions, and understand grammar and semantics [9, 16, 40]. Drawing on the work of philosopher Jerry Fodor, Bermúdez refers to these as “truth rules” and adds that sentences are true when 1) they are formed following the “truth rules;” and 2) they meet the “truth condition,” the state wherein all of the information included in the sentence is true [9].

Artificial Intelligence

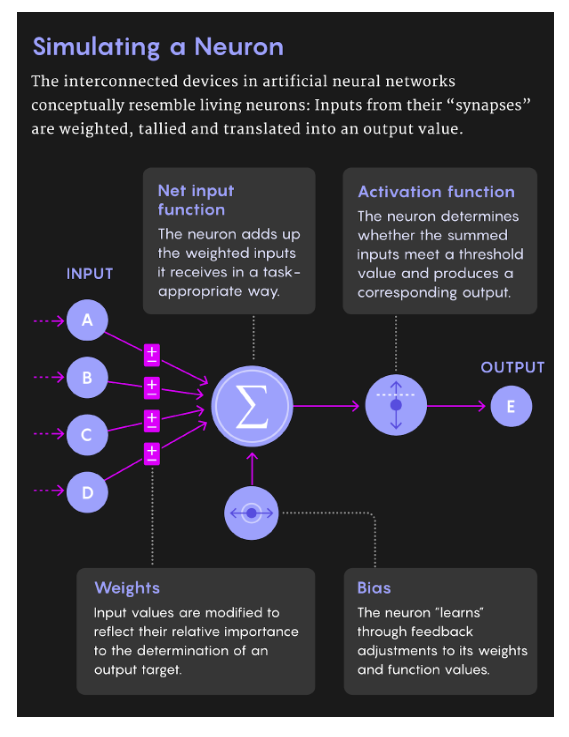

The collective structures, functions, and processes inform the development of artificial neural networks. Parnas observes that a neural network is structured and functions like the human brain [29]:

- Both transfer information internally.

- Both communicate information externally.

- Both have input, storage, and output processes (see Figure 4).

As models that closely resemble the human brain, artificial neural networks can serve as computational models that train AI to detect safety conditions, such as cultural characteristics, consider possible outcomes, and inform the development of AI safeguards [4, 6, 7, 10, 21, 23, 28, 29].

Artificial Neural Networks

According to IBM Cloud Education, “neural networks reflect the behavior of the human brain, allowing computer programs to recognize patterns and solve common problems in AI, machine learning, and deep learning” [4]. Bermúdez adds that artificial neural networks mirror the neurons’ function and can model different populations [9].

In a study, Sgroi et al. train and test a neural network to simulate human behavior and measure limitations around learning according to bounded rationality [38]. This method is selected, in part, over other machine learning methods in AI because, like humans, neural networks execute the following functions:

- Learn through examples.

- Match patterns.

- Learn rules.

- Experience limitations.

- Learn from errors.

- Make decisions.

Sgroi et al. train and test the neural network with a set of 2,000 games, compare its performance to human performance, and find, in part, that the neural network learned the games with similar rates of success and errors as the human participants [38]. In addition, the neural network followed the same approach to learning as the human participants in that both opted for the more “simple rules,” thereby demonstrating a parallel way of thinking [38].
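Learning through examples and from errors can be illustrated with a single artificial neuron. The Python sketch below is not the network used by Sgroi and Zizzo. It is a minimal perceptron, chosen here as an assumption for illustration, that learns the logical AND rule from four labeled examples by adjusting its weights whenever a prediction is wrong.

```python
from typing import List, Tuple

Example = Tuple[Tuple[int, int], int]


def predict(weights: List[float], bias: float, inputs: Tuple[int, int]) -> int:
    """Fire (1) when the weighted sum of the inputs plus the bias is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples: List[Example], epochs: int = 20, rate: float = 1.0):
    """Perceptron rule: nudge weights and bias in proportion to each error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias


# Labeled examples of the rule to learn (logical AND).
AND_EXAMPLES: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_EXAMPLES)
```

After training, the neuron reproduces the rule on all four examples. The same loop of example, prediction, error, and correction underlies larger networks, although those rely on more elaborate update rules.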

Anthropology

This parallel informs the development of artificial neural networks to recognize human patterns and conditions identified in different areas of anthropology.

Cognitive anthropology, for instance, studies humans in their native environments [11]. Boster explains that ethnographic studies can help researchers identify native patterns, collect qualitative data, and capture accurate representations of “native” worlds [11]. Hofstede et al. affirm the study of humans in their respective cultures and state that “if we want to understand their behavior, we have to understand their societies” [23].

Bermúdez notes that anthropology focuses on the “social dimensions” of thinking and how those dimensions vary within different cultures. Hofstede et al. provide the framework to study cultures and inform the design of conditions to train artificial neural networks to recognize cultural characteristics and simulate cultural sensitivity [9, 23, 28, 29, 39].

HOFSTEDE’S DIMENSIONS OF CULTURE

Cultural characteristics vary across countries and regions [23]. Hofstede et al. categorize the differences as identity, values, and institutions [23]. Identity refers to language, religion, and other visible factors [23]. Institutions correspond with laws, rules, and structures [23]. And values correspond with the invisible software of the human mind [23].

Value studies surface the individual beliefs that guide human actions, decisions, and behaviors. They also reveal patterns across data. Hofstede et al. point to the idea posed by social anthropology in the early twentieth century, wherein all humans encounter the same fundamental problems regardless of country or region [23].

Subsequent work by sociologist Alex Inkeles and psychologist Daniel Levinson explored this idea and proposed a set of common problems [23]. Then, a study by Geert Hofstede of International Business Machines (IBM) employee surveys, encompassing values from forty-plus countries, supported Inkeles and Levinson’s work. It used the empirical data to compare statistical relationships and identify trends or dimensions within the different cultures. Furthermore, it found that “problems that are basic to all human societies should be reflected in different studies, regardless of their methods” [23].

The collection of work led to the first four measurable dimensions of culture [23]. Additional work by others then expanded the number of dimensions to six HDCs [23]:

- Power Distance Index (PDI): Measures the degree to which people with less power accept an unequal distribution of power.

- Individualism (IDV): Measures individualist versus collective societies.

- Masculinity (MAS): Measures masculine versus feminine societies.

- Uncertainty Avoidance (UAI): Measures the degree to which people tolerate ambiguity.

- Long Term Orientation (LTO): Measures the degree to which people align with long-term versus short-term virtues.

- Indulgence (IVR): Measures the degree to which people indulge or refrain from human gratification.

Countries are assigned scores that rank and compare their values [3, 23]. In a recent update, Hofstede Insights notes that data now includes 102 countries [3, 23].
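A country's six scores can be treated as a small numeric profile that a computational model can compare. The Python sketch below shows one possible representation. The class name, the Euclidean distance measure, and the two sets of scores are hypothetical placeholders, not published Hofstede scores or a method taken from the cited sources.

```python
from dataclasses import astuple, dataclass
from math import sqrt


@dataclass(frozen=True)
class HDCProfile:
    """Six dimension scores for one country."""
    pdi: float  # Power Distance Index
    idv: float  # Individualism
    mas: float  # Masculinity
    uai: float  # Uncertainty Avoidance
    lto: float  # Long Term Orientation
    ivr: float  # Indulgence


def distance(a: HDCProfile, b: HDCProfile) -> float:
    """Euclidean distance between two profiles (an assumed comparison)."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(astuple(a), astuple(b))))


# Placeholder values for two hypothetical countries, not real scores.
country_a = HDCProfile(50, 50, 50, 50, 50, 50)
country_b = HDCProfile(53, 46, 50, 50, 50, 50)
gap = distance(country_a, country_b)
```

A smaller distance would suggest more similar value profiles under this measure. Whether a single distance is meaningful across dimensions is itself an assumption that would need validation.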

A CULTURAL CROWD LEARNING MODEL

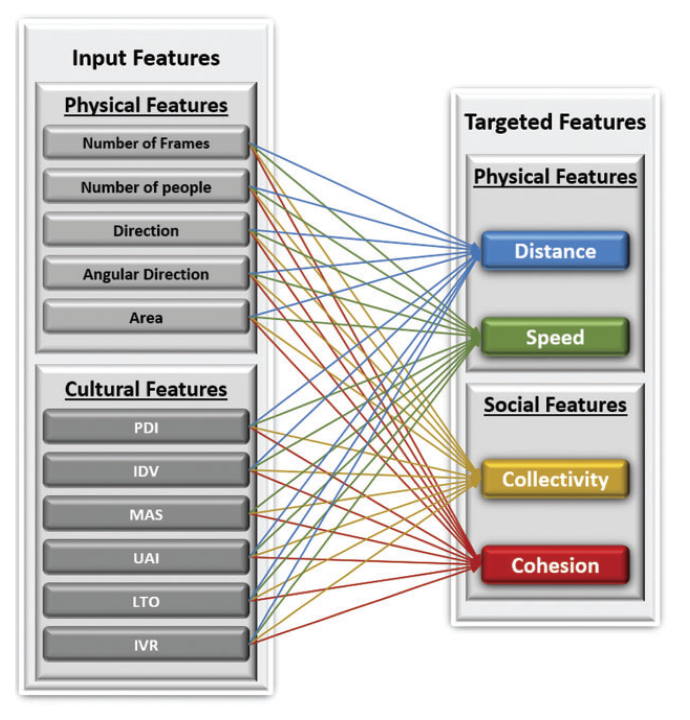

Baothman et al. integrate the country scores for Austria, China, France, Brazil, Japan, Germany, Spain, and Turkey to develop a cognitive decision support tool for cross-cultural events [7]. Figure 5 illustrates the proposed cultural crowds (CC) learning model, which covers the cognitive processes of perception, learning, and knowledge acquisition and uses HDCs to understand crowd behavior [7, 23].

As a learning model, the artificial neural network captures cultural, physical, and social characteristics to predict the correlations between HDCs and the targeted features [7].

First, input nodes receive the characteristics in the perception phase [7]. Second, the application and removal of HDCs from the CC dataset highlight any positive or negative effects on the targeted features in the learning and experimentation phase [7]. Third, this process helps to predict correlations between the HDCs and the targeted features in the knowledge acquisition phase [7].
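The idea of applying and then removing HDCs to observe their effect resembles a feature ablation. The Python sketch below illustrates that idea only. It is not the CC model of Baothman et al. The toy records, the feature names (`density`, `uai`, `speed`), and the nearest-neighbor predictor are hypothetical assumptions chosen to keep the example small.

```python
from typing import Dict, List, Sequence

Record = Dict[str, float]


def loo_error(records: List[Record], features: Sequence[str], target: str) -> float:
    """Leave-one-out mean absolute error of a 1-nearest-neighbor predictor."""
    total = 0.0
    for i, held_out in enumerate(records):
        others = records[:i] + records[i + 1:]
        # Predict the held-out target from the most similar remaining record.
        nearest = min(
            others,
            key=lambda r: sum((r[f] - held_out[f]) ** 2 for f in features),
        )
        total += abs(nearest[target] - held_out[target])
    return total / len(records)


# Toy crowd records: one physical feature, one HDC score, one targeted feature.
RECORDS: List[Record] = [
    {"density": 1.0, "uai": 10.0, "speed": 1.0},
    {"density": 1.0, "uai": 90.0, "speed": 2.0},
    {"density": 2.0, "uai": 10.0, "speed": 1.0},
    {"density": 2.0, "uai": 90.0, "speed": 2.0},
]

with_hdc = loo_error(RECORDS, ["density", "uai"], "speed")
without_hdc = loo_error(RECORDS, ["density"], "speed")
effect = without_hdc - with_hdc  # positive: removing the HDC hurts prediction
```

In this toy dataset the targeted feature depends entirely on the HDC score, so removing it raises the error. Real crowd data would show smaller and noisier effects.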

The integration of HDCs to predict individual behavior within a crowd, identify positive and negative effects, and forecast different outcomes is valuable for understanding the learning model’s decision-making processes [7]. These capabilities provide insight into future research and AI training. Specifically, they point to HDCs as safety conditions in the development of AI that makes informed and equitable decisions.

CONCLUSION

This literature review attempted to compare human and artificial neural network functions to identify parallels in decision-making processes. It also explored HDCs as possible safety conditions that can be integrated into computational models to detect cultural characteristics and simulate cultural sensitivity as part of AI decision-making processes. Furthermore, it aimed to consider paths for future research that mitigate the risks of AI and AGI and safeguard human-computer interactions.

REFERENCES

- 1. Brain inspired. 2022. BI 128 Hakwan Lau: In consciousness we trust. Podcast. (20 February 2022). Retrieved February 28, 2022 from [Link]

- 2. Hanson Robotics. 2021. Hanson AI: Developing Meaningful Interactions. Retrieved March 9, 2022 from [Link]

- 3. Hofstede Insights. 2023. Country Comparison Tool. Retrieved from [Link]

- 4. IBM. 2020. Neural Networks. Retrieved February 28, 2022 from [Link]

- 5. Johns Hopkins Medicine. 2022. Brain anatomy and how the brain works. Retrieved March 9, 2022 from [Link]

- 6. Making Sense. 2021. #255 - The Future of Intelligence. Podcast. (9 July 2021). Retrieved March 10, 2022 from [Link]

- 7. Fatmah Abdulrahman Baothman, Osama Ahmed Abulnaja, and Fatima Jafar Muhdher. 2021. A novel cultural crowd model toward cognitive artificial intelligence. Computers, Materials, & Continua 69, 3 (March 2021), 3337-3363. [Link]

- 8. Anil Ananthaswamy. 2021. Artificial neural nets finally yield clues to how brains learn. (Feb. 2021). Retrieved March 1, 2022 from [Link]

- 9. José Luis Bermúdez. 2020. Cognitive Science: An introduction to the science of the mind (3rd. ed.). Cambridge University Press, New York, NY.

- 10. Ladislau Bölöni, Taranjeet Singh Bhatia, Saad Ahmad Khan, Jonathan Streater, and Stephen M. Fiore. 2018. Towards a computational model of social norms. PLOS One 13, 4 (April 2018), e0195331–e0195331. [Link]

- 11. James S. Boster. 2012. Cognitive anthropology is a cognitive science. Topics in Cognitive Science 4, 3 (June 2012), 372-78. [Link]

- 12. Alicia Cotonia. 2020. Axon Terminal. (May 2020). Retrieved February 28, 2022 from [Link]

- 13. Sam Daley. 2021. 36 examples of artificial intelligence shaking up business as usual. (Feb. 2023). Retrieved March 9, 2022 from [Link]

- 14. John Dewey. 1910. How we think. D.C. Heath & Co., Boston, MA.

- 15. Norman Doidge. 2007. The brain that changes itself: Stories of personal triumph from the frontiers of brain science. Penguin Books. New York, NY.

- 16. Gabe Dupre. 2020. What would it mean for natural language to be the language of thought? Linguistics and Philosophy 44, 4 (June 2020), 773-812. [Link]

- 17. Clark Davidson Elliott. 1992. The Affective Reasoner: A process model of emotions in a multi-agent system. PhD Dissertation. Northwestern University, Evanston, IL.

- 18. Clark Elliott and Greg Siegle. 1993. Variables influencing the intensity of simulated affective states. In Proceedings of the AAAI Spring Symposium on Reasoning about Mental States - Formal Theories and Applications (1993), March 23 - 25, 1993, Palo Alto, California. AAAI. Washington, DC, 58-67. [Link]

- 19. Howard Gardner. 1985. The mind’s new science: A history of the cognitive revolution. Basic Books, New York, NY.

- 20. Michael Haenlein and Andreas Kaplan. 2019. A brief history of artificial intelligence: On the past, present, and future of artificial intelligence. California Management Review 61, 4 (July 2019), 5-14. [Link]

- 21. Sam Harris. 2016. TEDSummit: Can we build AI without losing control over it? Video. (29 June 2016). Retrieved March 8, 2022 from [Link]

- 22. Geert Hofstede. Country Comparison Graphs. Retrieved from [Link]

- 23. Geert Hofstede, Gert Jan Hofstede, and Michael Minkov. 2010. Cultures and organizations: software of the mind: Intercultural cooperation and its importance for survival (3rd. ed.). McGraw Hill, New York, NY.

- 24. Alishba Imran. 2021. Building out Sophia 2020: An integrative platform for embodied cognition. (Jan. 2021). Retrieved March 9, 2022 from [Link]

- 25. Etienne Koechlin. 2019. Human Decision-Making Beyond the Rational Decision Theory. Trends in cognitive sciences 24, 1 (Nov. 2019), 4–6. [Link]

- 26. Joseph P. McFall. 2015. Directions toward a meta-process model of decision making: Cognitive and behavioral models of change. Behavioral Development Bulletin 20, 1 (Feb. 2015), 32-44. [Link]

- 27. Melanie Mitchell. 2021. What does it mean for AI to understand? (Dec. 2021). Retrieved March 1, 2022 from [Link]

- 28. Vincent C. Müller. 2014. Risks of general artificial intelligence. Journal of Experimental & Theoretical Artificial Intelligence 26, 3 (June 2014), 297-301. [Link]

- 29. David Lorge Parnas. 2017. The real risks of artificial intelligence. Communications of the ACM 60, 10 (October 2017), 27–31. [Link]

- 30. Jonathan Pevsner. 2019. Leonardo da Vinci’s studies of the brain. The Lancet (British Edition) 393, 10179 (April 2019), 1465-72. [Link]

- 31. Zenon Pylyshyn. 1999. Is vision continuous with cognition? The case for cognitive impenetrability of visual perception. The Behavioral and Brain Sciences 22, 3 (June 1999), 341–365. doi:10.1017/S0140525X99002022

- 32. V. S. Ramachandran. 2004. A brief tour of human consciousness [sic]. Pearson Education, Inc., New York, NY.

- 33. Noam Saadon-Grosman, Yonatan Loewenstein, and Shahar Arzy. 2020. The ‘creatures’ of the human cortical somatosensory system. Brain Communications 2, 1 (Jan. 2020), [Link]

- 34. Stephen J. Read, Brian M. Monroe, Aaron L. Brownstein, Yu Yang, Gurveen Chopra, and Lynn C. Miller. 2010. A Neural Network Model of the Structure and Dynamics of Human Personality. Psychological Review 117, 1 (Jan. 2010), 61–92. [Link]

- 35. Eugene Sadler-Smith. 2015. Wallas’ four-staged model of the creative process: More than meets the eye? Creativity Research Journal 27, 4 (Nov. 2015), 342-52. [Link]

- 36. Herbert Alexander Simon. 1978. Richard R. Ely lecture: rationality as a process and as product of thought. The American Economic Review 68, 1 (May 1978), 1-16. [Link]

- 37. Herbert Alexander Simon. 1979. Rational decision making in business organizations. The American Economic Review 69, 4 (Sep. 1979), 493-513. [Link]

- 38. Daniel Sgroi and Daniel J. Zizzo. 2007. Neural networks and bounded rationality. Physica A 375 (March 2007), 717-725. [Link]

- 39. Kaj Sotala and Roman V. Yampolskiy. 2014. Responses to catastrophic AGI Risk: A survey. Physica Scripta 90, 1 (Dec. 2014) 18001–. [Link]

- 40. Dustin Stokes. 2013. Cognitive Penetrability of Perception. Philosophy Compass 8, 7 (July 2013), 646-63. [Link]

- 41. Mike Thomas. 2022. The future of AI: How artificial intelligence will change the world. (Updated March 2023). Retrieved March 3, 2022 from [Link]

- 42. Tuong-Van Vu, Catrin Finkenauer, Mariette Huizinga, Sheida Novin, and Lydia Krabbendam. 2017. Do individualism and collectivism on three levels (country, individual, and situation) influence theory-of-mind efficiency? A cross-country study. PLOS One 12, 8 (Aug. 2017), e0183011–e0183011. [Link]

- 43. Natalie Wolchover. 2020. Artificial intelligence will do what we ask. That’s a problem. (Jan. 2020). Retrieved March 1, 2022 from [Link]